I created this tutorial because I was surprised at the lack of documentation and support for audio programming using the Cinder framework. The tutorial is split up into three parts:

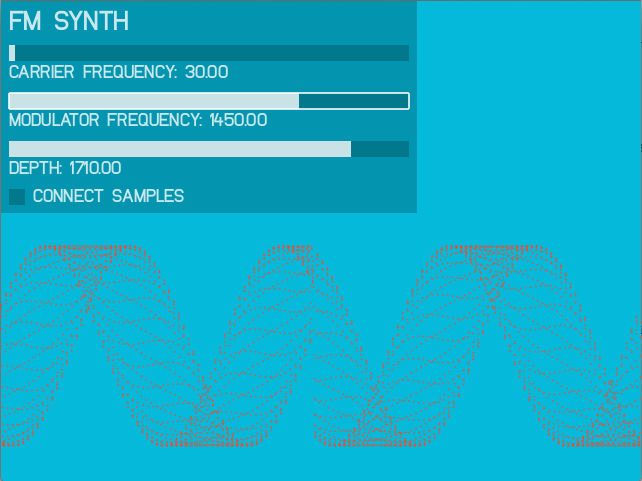

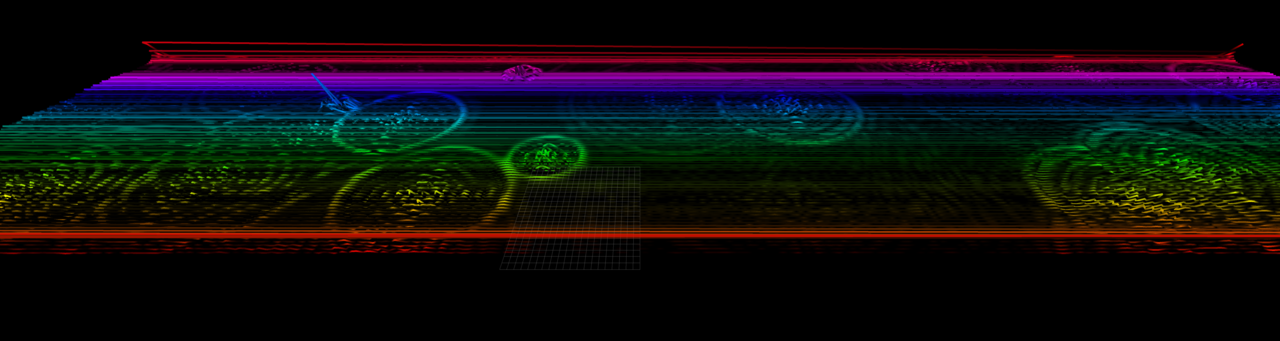

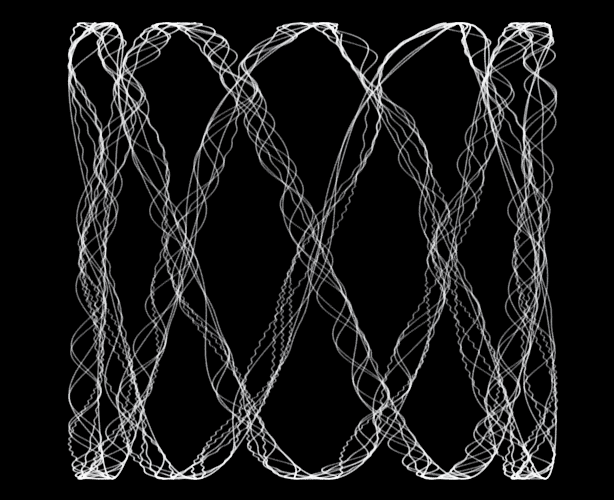

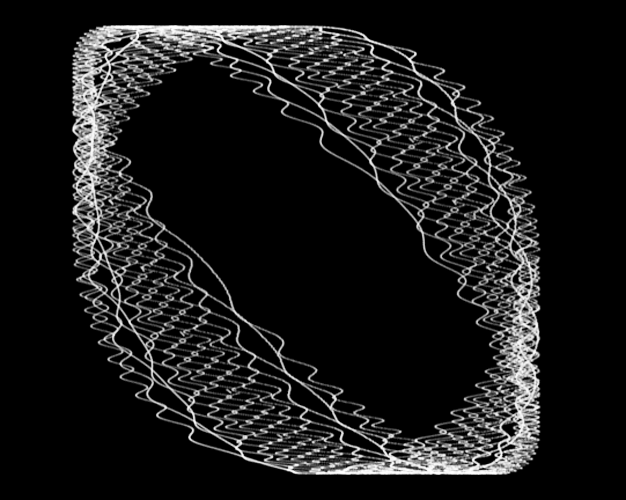

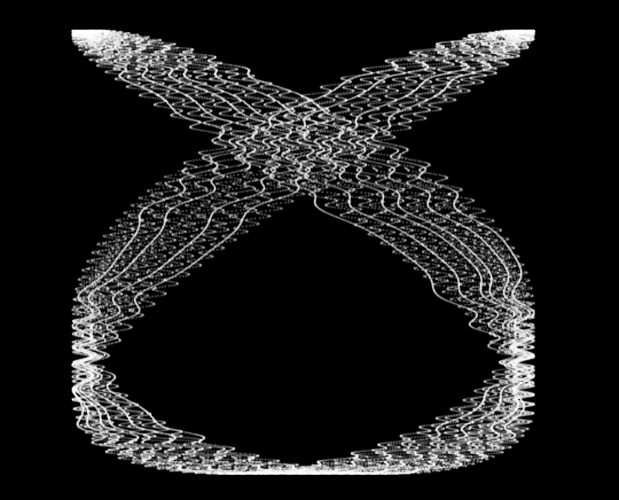

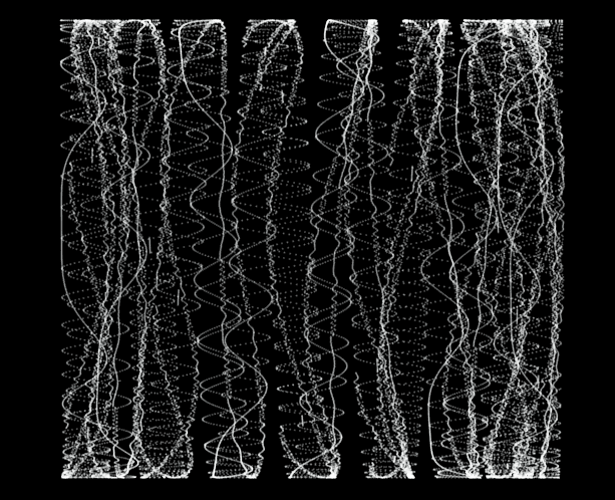

Part one explains how to download Cinder, set up a basic application, add an audio callback to the project and use it to output a sine wave. Part two describes how to add the Gamma synthesis library to Cinder and demonstrates how it can be used to set up a simple FM synth. Part three shows how to build an interface for this synthesizer using the ciUI library. It ends with the creation of a simple waveform visualizer, leaving us with a nice little audio/visual instrument app. Here’s a preview of the final result:

Part I: Installing Cinder and Creating an Audio Callback

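1. Download and install Cinder from here. Note that this tutorial uses Cinder's original audio API (audio::Output and audio::Callback) together with AppBasic. Cinder 0.8.6 replaced that audio API, so the code builds as written only against an earlier 0.8.x release.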

2. Open Tinderbox, found in the Cinder/tools folder. It creates new Cinder projects for us. Use these settings:

Target: Basic App (default)

Template: OpenGL (default)

Project Name: CinderGamma

Then choose where you want to save the project and select Xcode as the compiler. Click “Create” and Tinderbox will generate a folder called CinderGamma containing the project files.

3. Inside this folder, open the xcode folder and double-click the Xcode project. Once Xcode opens, you should be able to build and run the project, which launches a simple Cinder application with a plain black window. If this doesn’t work, check your build settings and make sure that the “Base SDK” is set to “Latest OS X…” and not some other value; I’ve had problems with this in the past.

4. Now that the basic app builds and runs, we have access to a large set of graphics and interface tools. For instance, we can change the background color by altering the RGB values passed to gl::clear in the draw function of our main program file, CinderGammaApp.cpp:

```cpp
void CinderGammaApp::draw()
{
    // clear out the window with blue instead of black
    gl::clear( Color( 0, 0, 0.7 ) );
}
```

But this tutorial is about sound, not graphics, so let’s get started. First we need to include two header files: one for audio output and one for audio callbacks.

```cpp
#include "cinder/audio/Output.h"
#include "cinder/audio/Callback.h"
```

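Then we need to add a callback prototype and two floats to our CinderGammaApp class:

```cpp
// in the public section of CinderGammaApp:
void myAudioCallback( uint64_t inSampleOffset, uint32_t ioSampleCount, ci::audio::Buffer32f *ioBuffer );

// in the private section:
float phaseIncrement;
float phase;
```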
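Why do phase and phaseIncrement live in the class rather than inside the callback? The callback is called once per buffer, and the phase has to carry over from one buffer to the next, otherwise the waveform jumps at every buffer boundary and you hear clicks. The sketch below is plain C++ with no Cinder dependency; SineState and renderMono are hypothetical names standing in for the two members and the callback loop.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// Hypothetical stand-in for the two members added to CinderGammaApp.
struct SineState {
    float phase = 0.0f;          // radians; must persist between callbacks
    float phaseIncrement = 0.0f; // radians to advance per sample
};

// Renders `count` mono samples, continuing from the phase stored in `s`
// (same loop shape as myAudioCallback, minus the stereo copy).
void renderMono(SineState& s, float* out, std::size_t count) {
    assert(out != nullptr || count == 0);
    const float twoPi = 6.2831853f;
    for (std::size_t i = 0; i < count; ++i) {
        s.phase += s.phaseIncrement;
        if (s.phase >= twoPi) s.phase -= twoPi; // wrap into [0, 2*pi)
        out[i] = std::sin(s.phase);
    }
}
```

Rendering 512 samples in one call and 256 + 256 samples in two calls produces identical output, because the second call picks up exactly where the first left off. Resetting the phase to zero between calls (which is what a local variable would do) breaks that continuity.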

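Then initialize the phase and start the callback in our setup function:

```cpp
void CinderGammaApp::setup()
{
    // class members are not zero-initialized, so start the oscillator at 0
    phase = 0.0f;
    phaseIncrement = 0.0f;
    audio::Output::play( audio::createCallback( this, &CinderGammaApp::myAudioCallback ) );
}
```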
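audio::createCallback( this, &CinderGammaApp::myAudioCallback ) pairs an object with one of its member functions so the audio system can call back into our app. The sketch below is not Cinder's implementation; it is a hypothetical makeCallback built on std::function that shows the general C++ pattern, with ToyApp standing in for CinderGammaApp and a plain float pointer in place of audio::Buffer32f.

```cpp
#include <cassert>
#include <cstdint>
#include <functional>

// Hypothetical callback type: (sample offset, sample count, buffer).
using AudioFn = std::function<void(uint64_t, uint32_t, float*)>;

// Pairs an object pointer with a pointer-to-member-function; invoking the
// returned function calls obj->method(...).
template <typename T>
AudioFn makeCallback(T* obj, void (T::*method)(uint64_t, uint32_t, float*)) {
    assert(obj != nullptr && method != nullptr);
    return [obj, method](uint64_t offset, uint32_t count, float* buf) {
        (obj->*method)(offset, count, buf);
    };
}

// Hypothetical app class standing in for CinderGammaApp.
struct ToyApp {
    uint32_t samplesRequested = 0;
    void myAudioCallback(uint64_t /*offset*/, uint32_t count, float* buf) {
        samplesRequested += count;
        for (uint32_t i = 0; i < count; ++i) buf[i] = 0.0f; // write silence
    }
};
```

Because the object pointer is captured, the callback can read and update member state such as phase, which a free function could not do without globals.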

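And finally we need to implement the audio callback itself:

```cpp
void CinderGammaApp::myAudioCallback( uint64_t inSampleOffset, uint32_t ioSampleCount, audio::Buffer32f *ioBuffer )
{
    // frequency over sample rate, times two pi (assumes 44.1 kHz output)
    phaseIncrement = ( 300.0f / 44100.0f ) * (float)M_PI * 2.0f;

    for( uint32_t i = 0; i < ioSampleCount; i++ ) {
        phase += phaseIncrement;
        float tempVal = math<float>::sin( phase );

        // interleaved stereo: left sample, then right sample
        ioBuffer->mData[ i * ioBuffer->mNumberChannels ] = tempVal;
        ioBuffer->mData[ i * ioBuffer->mNumberChannels + 1 ] = tempVal;

        // wrap the phase back into [0, 2*pi) so it never grows large
        // enough to lose floating-point precision
        if( phase >= (float)M_PI * 2.0f )
            phase -= (float)M_PI * 2.0f;
    }
}
```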
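The callback writes to index i * mNumberChannels and i * mNumberChannels + 1, which assumes an interleaved stereo buffer (left, right, left, right, …). As a sketch under that interleaving assumption, the loop can be generalized to any channel count by writing the same value to every channel of each frame. fillSine is a hypothetical, Cinder-free helper; numChannels plays the role of mNumberChannels.

```cpp
#include <cassert>
#include <cmath>
#include <cstdint>

// Fills an interleaved buffer (frame 0: ch0 ch1 ..., frame 1: ch0 ch1 ...)
// with one sine, copied to every channel. `phase` is carried across calls.
void fillSine(float* data, uint32_t numFrames, uint32_t numChannels,
              float frequency, float sampleRate, float& phase) {
    assert(numChannels > 0 && sampleRate > 0.0f);
    const float twoPi = 6.2831853f;
    const float increment = frequency / sampleRate * twoPi; // radians per sample
    for (uint32_t i = 0; i < numFrames; ++i) {
        phase += increment;
        if (phase >= twoPi) phase -= twoPi; // keep phase in [0, 2*pi)
        const float value = std::sin(phase);
        for (uint32_t ch = 0; ch < numChannels; ++ch)
            data[i * numChannels + ch] = value;
    }
}
```

With numChannels set to 2 this writes exactly the same samples as the callback above; with a mono device it no longer writes past the end of each frame.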

5. We now have a simple app that opens and plays a 300 Hz sine tone. The complete code is posted below for your convenience. Our next step will be to add the Gamma synthesis library, which has a staggering number of signal processing tools that we can use to make awesome sounds.
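The phase increment in the callback is the frequency divided by the sample rate, times 2π. Worked out for the values used here, assuming the output really runs at the hard-coded 44100 Hz:

$$
\Delta\phi = \frac{2\pi f}{f_s} = \frac{2\pi \cdot 300}{44100} \approx 0.04274 \ \text{rad/sample},
\qquad
\frac{f_s}{f} = \frac{44100}{300} = 147 \ \text{samples per cycle}.
$$

If the device actually ran at 48 kHz, the same increment would produce 300 × 48000 / 44100 ≈ 326.5 Hz, so the sample rate in this formula should match the real output rate.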

Complete program:

```cpp
#include "cinder/app/AppBasic.h"
#include "cinder/gl/gl.h"
#include "cinder/CinderMath.h"
#include "cinder/audio/Output.h"
#include "cinder/audio/Callback.h"

using namespace ci;
using namespace ci::app;
using namespace std;

class CinderGammaApp : public AppBasic {
  public:
    void setup();
    void mouseDown( MouseEvent event );
    void update();
    void draw();
    void myAudioCallback( uint64_t inSampleOffset, uint32_t ioSampleCount, ci::audio::Buffer32f *ioBuffer );

  private:
    float phaseIncrement;
    float phase;
};

void CinderGammaApp::setup()
{
    // class members are not zero-initialized, so start the oscillator at 0
    phase = 0.0f;
    phaseIncrement = 0.0f;
    audio::Output::play( audio::createCallback( this, &CinderGammaApp::myAudioCallback ) );
}

void CinderGammaApp::mouseDown( MouseEvent event )
{
}

void CinderGammaApp::update()
{
}

void CinderGammaApp::draw()
{
    // clear out the window with blue
    gl::clear( Color( 0, 0, 0.7 ) );
}

void CinderGammaApp::myAudioCallback( uint64_t inSampleOffset, uint32_t ioSampleCount, audio::Buffer32f *ioBuffer )
{
    // frequency over sample rate, times two pi (assumes 44.1 kHz output)
    phaseIncrement = ( 300.0f / 44100.0f ) * (float)M_PI * 2.0f;

    for( uint32_t i = 0; i < ioSampleCount; i++ ) {
        phase += phaseIncrement;
        float tempVal = math<float>::sin( phase );

        // interleaved stereo: left sample, then right sample
        ioBuffer->mData[ i * ioBuffer->mNumberChannels ] = tempVal;
        ioBuffer->mData[ i * ioBuffer->mNumberChannels + 1 ] = tempVal;

        // wrap the phase back into [0, 2*pi) so it never grows large
        // enough to lose floating-point precision
        if( phase >= (float)M_PI * 2.0f )
            phase -= (float)M_PI * 2.0f;
    }
}

CINDER_APP_BASIC( CinderGammaApp, RendererGl )
```
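Since the callback runs on the audio thread, it is otherwise hard to check the oscillator except by ear, so it can help to verify it offline. The sketch below is a hypothetical, Cinder-free test harness: renderSine repeats the callback's phase-accumulator loop into a vector, and estimateFrequency counts zero crossings (a sine crosses zero twice per cycle) to estimate the pitch.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// Offline stand-in for myAudioCallback: renders `seconds` of a
// phase-accumulator sine into a mono vector (no Cinder, no audio device).
std::vector<float> renderSine(float frequency, float sampleRate, float seconds) {
    assert(sampleRate > 0.0f && seconds >= 0.0f);
    const float twoPi = 6.2831853f;
    const float increment = frequency / sampleRate * twoPi;
    std::vector<float> out(static_cast<std::size_t>(sampleRate * seconds));
    float phase = 0.0f;
    for (float& sample : out) {
        phase += increment;
        if (phase >= twoPi) phase -= twoPi;
        sample = std::sin(phase);
    }
    return out;
}

// Estimates frequency by counting sign changes between adjacent samples.
float estimateFrequency(const std::vector<float>& x, float sampleRate) {
    if (x.size() < 2) return 0.0f;
    std::size_t crossings = 0;
    for (std::size_t i = 1; i < x.size(); ++i)
        if ((x[i - 1] < 0.0f) != (x[i] < 0.0f)) ++crossings;
    const float seconds = x.size() / sampleRate;
    return crossings / (2.0f * seconds);
}
```

One second of the 300 Hz tone at 44.1 kHz contains about 600 zero crossings, so the estimate should land very close to 300 Hz.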
