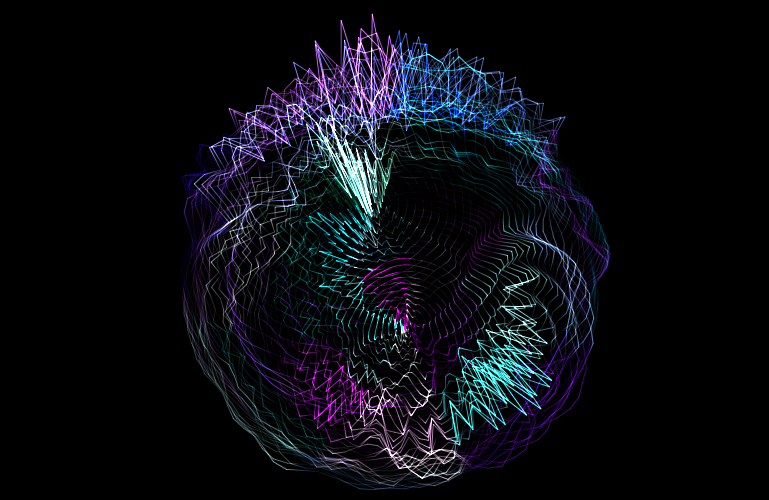

Under the name “Echobit,” Brian Hansen and I have been performing immersive VJ sets and audio-visual experiments using the Oculus Rift. We use audio feature extraction and music information retrieval (MIR) techniques to create rich, interactive visuals. Users can explore visual worlds while reacting to the music in real time. The visuals are also projected on the wall so that every audience member can take part in the experience.
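
The post doesn't say which audio features Echobit extracts. The C++ below is a minimal sketch that assumes two common MIR features: root-mean-square (RMS) energy for loudness and the spectral centroid for "brightness." It computes both from one buffer of mono samples and maps them onto visual parameters. The names `AudioFeatures`, `VisualParams`, `extractFeatures`, and `mapToVisuals`, and the mapping constants, are illustrative assumptions, not Echobit's code.

```cpp
#include <algorithm>
#include <cmath>
#include <cstddef>
#include <vector>

// Hypothetical feature set; the features Echobit actually uses are not documented.
struct AudioFeatures {
    float rms;               // overall loudness of the buffer
    float spectralCentroid;  // "brightness" in Hz
};

// Hypothetical parameters a shader or mesh generator might consume.
struct VisualParams {
    float scale;  // grows with loudness
    float hue;    // 0..1, rises with spectral brightness
};

// Root-mean-square energy of one buffer of mono samples in [-1, 1].
float computeRms(const std::vector<float>& samples) {
    if (samples.empty()) return 0.0f;
    double sum = 0.0;
    for (float s : samples) sum += static_cast<double>(s) * s;
    return static_cast<float>(std::sqrt(sum / samples.size()));
}

// Magnitude-weighted mean frequency, using a naive O(N^2) DFT over bins 0..N/2.
// A real-time system would use an FFT instead.
float computeSpectralCentroid(const std::vector<float>& samples, float sampleRate) {
    const std::size_t n = samples.size();
    if (n == 0) return 0.0f;
    const double pi = 3.14159265358979323846;
    double weighted = 0.0, total = 0.0;
    for (std::size_t k = 0; k <= n / 2; ++k) {
        double re = 0.0, im = 0.0;
        for (std::size_t t = 0; t < n; ++t) {
            double angle = 2.0 * pi * static_cast<double>(k) * static_cast<double>(t) / n;
            re += samples[t] * std::cos(angle);
            im -= samples[t] * std::sin(angle);
        }
        double magnitude = std::sqrt(re * re + im * im);
        double frequency = static_cast<double>(k) * sampleRate / n;
        weighted += frequency * magnitude;
        total += magnitude;
    }
    return total > 0.0 ? static_cast<float>(weighted / total) : 0.0f;
}

AudioFeatures extractFeatures(const std::vector<float>& samples, float sampleRate) {
    return {computeRms(samples), computeSpectralCentroid(samples, sampleRate)};
}

// Maps features to visuals: louder means bigger, brighter means a higher hue.
VisualParams mapToVisuals(const AudioFeatures& f, float sampleRate) {
    const float nyquist = sampleRate / 2.0f;
    float hue = nyquist > 0.0f ? f.spectralCentroid / nyquist : 0.0f;
    return {1.0f + 2.0f * f.rms, std::clamp(hue, 0.0f, 1.0f)};
}
```

The naive DFT keeps the example dependency-free. In a live set you would more likely run an FFT library once per audio buffer and pass the results to the render thread.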

I believe this is the first application of Virtual Reality technology to VJing, and to music visualization in general.

It is built in openFrameworks, using a custom system for generating audio-reactive geometry and GLSL shaders.
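
The post doesn't show the custom geometry system. The C++ below sketches one common way to make geometry audio-reactive. It smooths per-band spectral energy with a fast attack and slow release, then uses that energy to push the vertices of a ring outward. `Vec3`, `BandSmoother`, and `buildReactiveRing` are hypothetical names. In openFrameworks the resulting positions could be added to an `ofMesh`, or the same displacement could be done in a GLSL vertex shader.

```cpp
#include <cmath>
#include <cstddef>
#include <vector>

struct Vec3 { float x, y, z; };

// Smooths per-band energies so visuals don't flicker: fast attack, slow release.
class BandSmoother {
public:
    BandSmoother(std::size_t bands, float attack, float release)
        : values_(bands, 0.0f), attack_(attack), release_(release) {}

    // Moves each smoothed value a fraction of the way toward the new energy.
    const std::vector<float>& update(const std::vector<float>& bandEnergies) {
        for (std::size_t i = 0; i < values_.size() && i < bandEnergies.size(); ++i) {
            float coeff = bandEnergies[i] > values_[i] ? attack_ : release_;
            values_[i] += coeff * (bandEnergies[i] - values_[i]);
        }
        return values_;
    }

private:
    std::vector<float> values_;
    float attack_;
    float release_;
};

// Builds a ring of vertices in the XY plane. Each vertex samples one band,
// and its radius grows with that band's energy.
std::vector<Vec3> buildReactiveRing(const std::vector<float>& bands, std::size_t segments,
                                    float baseRadius, float amount) {
    std::vector<Vec3> verts;
    verts.reserve(segments);
    const float twoPi = 6.28318530718f;
    for (std::size_t i = 0; i < segments; ++i) {
        float energy = bands.empty() ? 0.0f : bands[i * bands.size() / segments];
        float r = baseRadius + amount * energy;
        float a = twoPi * static_cast<float>(i) / static_cast<float>(segments);
        verts.push_back({r * std::cos(a), r * std::sin(a), 0.0f});
    }
    return verts;
}
```

The asymmetric smoothing is a design choice. A fast attack keeps the geometry locked to transients such as kick drums, and a slow release stops it from jittering between frames.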

Music